Azure Load Balancer

Overview

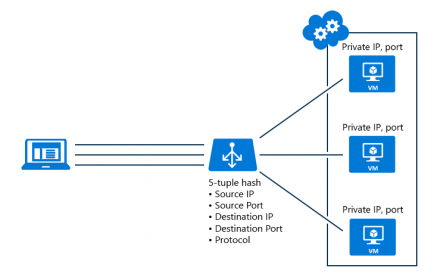

Azure Load Balancer is a layer 4 load balancer that distributes incoming traffic among healthy virtual machine instances. It uses a hash-based distribution algorithm: by default, a 5-tuple hash (source IP, source port, destination IP, destination port, protocol type) maps traffic to available servers. A load balancer can be either internet-facing, where it is reachable via public IP addresses, or internal, where it is reachable only from within a virtual network. Azure load balancers also support network address translation (NAT) to route traffic between public and private IP addresses.

Why use a Load Balancer?

You can use Azure Load Balancer to:

- Load-balance incoming internet traffic to your VMs. This configuration is known as a public load balancer.

- Load-balance traffic across VMs inside a virtual network. You can also reach a load balancer front end from an on-premises network in a hybrid scenario. Both scenarios use a configuration that is known as an internal load balancer.

- Port forward traffic to a specific port on specific VMs with inbound network address translation (NAT) rules.

- Provide outbound connectivity for VMs inside your virtual network by using a public load balancer.

Features

Load Balancer provides the following fundamental capabilities for TCP and UDP applications:

Load balancing

With Azure Load Balancer, you can create a load-balancing rule to distribute traffic that arrives at the frontend to backend pool instances. Load Balancer uses a hash-based algorithm to distribute inbound flows and rewrites the headers of flows to backend pool instances accordingly. A server is available to receive new flows when a health probe indicates a healthy backend endpoint.

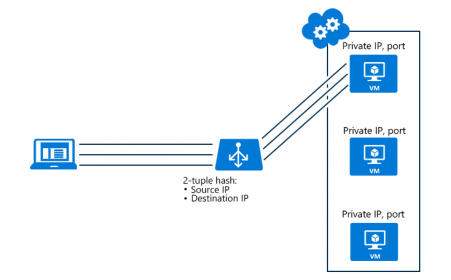

By default, Load Balancer uses a 5-tuple hash composed of source IP address, source port, destination IP address, destination port, and IP protocol number to map flows to available servers. You can choose to create affinity to a specific source IP address by opting into a 2- or 3-tuple hash for a given rule. All packets of the same packet flow arrive on the same instance behind the load-balanced front end. When the client initiates a new flow from the same source IP, the source port changes. As a result, the 5-tuple might cause the traffic to go to a different backend endpoint.
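The effect of the tuple choice can be illustrated with a minimal Python sketch. It is not Azure's implementation: the actual hash function is not described here, so SHA-256 stands in for it, and `pick_backend`, the mode names, and the sample addresses are hypothetical. The sketch assumes the 2-tuple mode hashes source and destination IP, and the 3-tuple mode adds the protocol.

```python
import hashlib

def pick_backend(flow, backends, mode="5-tuple"):
    """Map a flow to a backend by hashing selected header fields.

    flow is (src_ip, src_port, dst_ip, dst_port, protocol).
    SHA-256 is a stand-in; the real hash function is an assumption.
    """
    src_ip, src_port, dst_ip, dst_port, protocol = flow
    if mode == "5-tuple":
        key = (src_ip, src_port, dst_ip, dst_port, protocol)
    elif mode == "3-tuple":
        key = (src_ip, dst_ip, protocol)
    elif mode == "2-tuple":
        key = (src_ip, dst_ip)
    else:
        raise ValueError(f"unknown distribution mode: {mode}")
    digest = hashlib.sha256(repr(key).encode("utf-8")).digest()
    # Reduce the digest to an index into the list of available backends.
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]

backends = ["vm-a", "vm-b", "vm-c"]
first = ("198.51.100.7", 50000, "203.0.113.10", 443, "TCP")
second = ("198.51.100.7", 50001, "203.0.113.10", 443, "TCP")  # new source port

# With source IP affinity, both flows land on the same backend.
print(pick_backend(first, backends, "2-tuple"), pick_backend(second, backends, "2-tuple"))
# With the default 5-tuple, the second flow may land on a different backend.
print(pick_backend(first, backends), pick_backend(second, backends))
```

Because the source port is excluded from the 2- and 3-tuple keys, a client that opens a new connection keeps the same hash input and therefore the same backend, as long as the set of available backends does not change.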

Port forwarding

With Load Balancer, you can create an inbound NAT rule to port forward traffic from a specific port of a specific frontend IP address to a specific port of a specific backend instance inside the virtual network. This is also accomplished by the same hash-based distribution as load balancing. Common scenarios for this capability are Remote Desktop Protocol (RDP) or Secure Shell (SSH) sessions to individual VM instances inside the Azure Virtual Network. You can map multiple internal endpoints to the various ports on the same frontend IP address. You can use them to remotely administer your VMs over the internet without the need for an additional jump box.
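As a rough illustration, the outcome of a set of inbound NAT rules can be modeled as a lookup from a frontend IP address and port to a single backend endpoint. The sketch below models only that outcome, not how Load Balancer implements it; `NatRule`, `build_nat_table`, `forward`, and all addresses and ports are hypothetical.

```python
from typing import NamedTuple

class NatRule(NamedTuple):
    frontend_ip: str
    frontend_port: int
    backend_ip: str
    backend_port: int

def build_nat_table(rules):
    """Index rules by (frontend IP, frontend port); each pair maps to one backend."""
    table = {}
    for rule in rules:
        key = (rule.frontend_ip, rule.frontend_port)
        if key in table:
            raise ValueError(f"frontend endpoint {key} is already in use")
        table[key] = (rule.backend_ip, rule.backend_port)
    return table

def forward(table, frontend_ip, frontend_port):
    """Return the backend (IP, port) for an inbound connection, or None if no rule matches."""
    return table.get((frontend_ip, frontend_port))

# Two VMs reachable for RDP through different ports on the same frontend IP.
table = build_nat_table([
    NatRule("203.0.113.10", 50001, "10.0.0.4", 3389),
    NatRule("203.0.113.10", 50002, "10.0.0.5", 3389),
])
print(forward(table, "203.0.113.10", 50001))  # ('10.0.0.4', 3389)
print(forward(table, "203.0.113.10", 50002))  # ('10.0.0.5', 3389)
```

Each frontend port identifies exactly one VM, which is what makes it possible to administer individual instances through a single public IP address.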

Application agnostic and transparent

Load Balancer does not directly interact with TCP, UDP, or the application layer, so any TCP or UDP application scenario can be supported. It does not terminate or originate flows, does not interact with the payload of the flow, and provides no application layer gateway function. Protocol handshakes always occur directly between the client and the backend pool instance. A response to an inbound flow is always a response from a virtual machine. When the flow arrives on the virtual machine, the original source IP address is also preserved. The following examples further illustrate transparency:

- Every endpoint is only answered by a VM. For example, a TCP handshake always occurs between the client and the selected backend VM. A response to a request to a front end is a response generated by a backend VM. When you successfully validate connectivity to a front end, you are validating the end-to-end connectivity to at least one backend virtual machine.

- Application payloads are transparent to Load Balancer, and any UDP or TCP application can be supported. For workloads that require per-request HTTP processing or manipulation of application layer payloads (for example, parsing of HTTP URLs), you should use a layer 7 load balancer like Application Gateway.

- Because Load Balancer is agnostic to the TCP payload and does not provide TLS offload ("SSL"), you can build end-to-end encrypted scenarios using Load Balancer and scale out TLS applications by terminating the TLS connection on the VM itself. For example, your TLS session keying capacity is limited only by the type and number of VMs you add to the backend pool. If you require "SSL offloading" or application layer treatment, or wish to delegate certificate management to Azure, you should use Azure's layer 7 load balancer, Application Gateway, instead.

Automatic reconfiguration

Load Balancer instantly reconfigures itself when you scale instances up or down. Adding or removing VMs from the backend pool reconfigures the load balancer without additional operations on the load balancer resource.

Health probes

To determine the health of instances in the backend pool, Load Balancer uses health probes that you define. When a probe fails to respond, the load balancer stops sending new connections to the unhealthy instances. Existing connections are not affected, and they continue until the application terminates the flow, an idle timeout occurs, or the VM is shut down.

Three types of probes are supported:

- HTTP custom probe: You can use this probe to create your own custom logic to determine the health of a backend pool instance. The load balancer regularly probes your endpoint (every 15 seconds, by default). The instance is considered to be healthy if it responds with an HTTP 200 within the timeout period (default of 31 seconds). Any status other than HTTP 200 causes this probe to fail. This probe is also useful for implementing your own logic to remove instances from the load balancer's rotation. For example, you can configure the instance to return a non-200 status if the instance is greater than 90 percent CPU. This probe overrides the default guest agent probe.

- TCP custom probe: This probe relies on establishing a successful TCP session to a defined probe port. As long as the specified listener on the VM exists, this probe succeeds. If the connection is refused, the probe fails. This probe overrides the default guest agent probe.

- Guest agent probe: The load balancer can also utilize the guest agent inside the VM. The guest agent listens and responds with an HTTP 200 OK response only when the instance is in the ready state. If the agent fails to respond with an HTTP 200 OK, the load balancer marks the instance as unresponsive and stops sending traffic to that instance. The load balancer continues to attempt to reach the instance. If the guest agent responds with an HTTP 200, the load balancer sends traffic to that instance again. Guest agent probes are a last resort and not recommended when HTTP or TCP custom probe configurations are possible.
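The HTTP custom probe logic described above can be sketched in Python as follows. This is a simplified model rather than Azure's probe implementation: `health_status`, `probe_is_healthy`, and `eligible_backends` are hypothetical names, the choice of 503 as the non-200 status is an assumption, and the 90 percent CPU threshold and 31-second timeout are taken from the text.

```python
def health_status(cpu_percent, threshold=90.0):
    """Backend side: status code a custom health endpoint might return.

    Returns a non-200 status (503 here, an arbitrary choice) when CPU
    usage is above the threshold, which takes the instance out of rotation.
    """
    return 200 if cpu_percent <= threshold else 503

def probe_is_healthy(status_code, elapsed_seconds, timeout_seconds=31.0):
    """Probe side: only an HTTP 200 received within the timeout counts as healthy.

    status_code is None when the instance did not respond at all.
    """
    return status_code == 200 and elapsed_seconds <= timeout_seconds

def eligible_backends(probe_results):
    """Return the instances that may receive new flows.

    probe_results maps an instance name to (status_code, elapsed_seconds).
    """
    return [
        name
        for name, (status, elapsed) in probe_results.items()
        if probe_is_healthy(status, elapsed)
    ]

results = {
    "vm-a": (health_status(cpu_percent=35.0), 0.2),   # healthy
    "vm-b": (health_status(cpu_percent=97.0), 0.3),   # busy, returns non-200
    "vm-c": (None, 40.0),                             # no response before the timeout
}
print(eligible_backends(results))  # ['vm-a']
```

Only new flows are steered by this result; as noted earlier, existing connections to an instance that becomes unhealthy are not affected.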

Outbound connections (SNAT)

All outbound flows from private IP addresses inside your virtual network to public IP addresses on the internet can be translated to a frontend IP address of the load balancer. When a public front end is tied to a backend VM by way of a load-balancing rule, Azure programs outbound connections to be automatically translated to the public frontend IP address. This translation is known as source network address translation (SNAT), and it offers the following benefits:

- Enable easy upgrade and disaster recovery of services, because the front end can be dynamically mapped to another instance of the service.

- Simplify access control list (ACL) management. ACLs expressed in terms of frontend IPs do not change as services scale up or down or get redeployed. Translating outbound connections to a smaller number of IP addresses than machines can reduce the burden of whitelisting.
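The translation of outbound flows can be sketched as a table that rewrites each private source endpoint to the shared frontend IP address. The text does not describe how ports are chosen, so the sequential port allocation below is an assumption made only so that return traffic could be told apart; `SnatTable` and the addresses are hypothetical.

```python
class SnatTable:
    """Toy model of SNAT: many private endpoints share one frontend IP."""

    def __init__(self, frontend_ip, first_port=1024, last_port=65535):
        self.frontend_ip = frontend_ip
        self._next_port = first_port   # assumption: ports are handed out in order
        self._last_port = last_port
        self._mappings = {}            # (private_ip, private_port) -> frontend port

    def translate(self, private_ip, private_port):
        """Return the (frontend_ip, frontend_port) used for this outbound flow."""
        key = (private_ip, private_port)
        if key not in self._mappings:
            if self._next_port > self._last_port:
                raise RuntimeError("no frontend ports left for SNAT")
            self._mappings[key] = self._next_port
            self._next_port += 1
        return (self.frontend_ip, self._mappings[key])

snat = SnatTable("203.0.113.10")
print(snat.translate("10.0.0.4", 49152))  # ('203.0.113.10', 1024)
print(snat.translate("10.0.0.5", 49152))  # ('203.0.113.10', 1025)
```

Both VMs appear to external services as `203.0.113.10`, so an ACL on the remote side needs only that one address regardless of how many machines sit behind it.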